While it's said that practice makes perfect, I don't want my CPU hogged when I visit a site. With Firebug we can avoid these vicious scripts.

I dislike it when people waste my CPU, and I really dislike the "slow script" dialog. I have blacklisted a few sites because all their animations and scripts make it impossible to even scroll the pages smoothly. I certainly don't want my own sites to be like this. I just came across a very slow script on one of my pages, and I will use it as a case study.

The problem

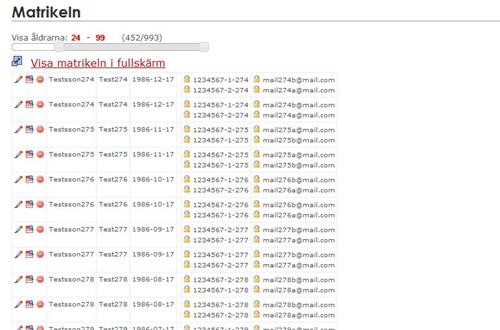

I'm building a new website for my orienteering club. One of the features is a list of members with phonenumbers and emails and some other stuff. Because of historic reasons all ~500 members are shown at the same time. This naturally causes a slow page load. To counter this I'm putting all the filters i javascript. My testpage looks like this

As you can see, there are 993 rows like this. I'm testing with twice the data I expect later to make sure the page will be decent to use.

The filter runs when you stop dragging. Even with 1000 rows the complexity is fairly low: pretty much O(n) in this case, which shouldn't be a problem. To my surprise, the script caused Firefox to scream in agony, freezing for about 3 seconds. My first thought was to ignore this, as it wasn't that bad. But that would be a real mistake, because if I notice lag, the users certainly will too.

Tip #1: If you notice slow scripts or slow rendering, it will be unbearable for most regular users. As a dev you are probably sitting at a decent machine. If it has to struggle, you can be sure that people with a 5-year-old laptop will have serious problems on your site.

Here is the function that did the filtering:

    var filter = function (filterState) {
        var total = 0;
        var ageIncl = 0;
        $('#matrikel tr').each(function () {
            total++;
            var elem = $(this);
            var show = true;
            var age = elem.attr('data-age');
            if (filterState.ageFrom <= age && age <= filterState.ageTo) {
                ageIncl++;
            } else {
                show = false;
            }
            $('#ageResult').html(ageIncl + '/' + total);
            if (show) {
                elem.show();
            } else {
                elem.hide();
            }
        });
    };
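One detail worth checking in the function above: `attr('data-age')` returns a string. The sketch below shows why it may be safer to parse the values before comparing them. It assumes that `filterState.ageFrom` and `filterState.ageTo` could also arrive as strings (for example when read from an input field), which the page does not state, and `inRange` is a hypothetical helper name.

```javascript
// Hypothetical helper: parse everything to numbers before comparing.
function inRange(ageAttr, ageFrom, ageTo) {
    var age = parseInt(ageAttr, 10);
    var from = parseInt(ageFrom, 10);
    var to = parseInt(ageTo, 10);
    return from <= age && age <= to;
}

// Two strings are compared character by character, so '9' sorts after '10'.
console.log('9' <= '10');             // false
// A number on one side makes JavaScript convert the other side to a number.
console.log(9 <= '10');               // true
console.log(inRange('9', '5', '10')); // true
```

If the filter limits are already numbers, the original comparison behaves the same as this helper.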

Step 1: Profiling

Before starting to optimize, you should get some numbers on how bad your current algorithm really is. Why? For several reasons:

- You don't want to spend time optimizing the wrong part

- You want to be able to compare your new implementation with the old one
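For the second point, a rough timing wrapper can give a single number to compare before and after a change, alongside whatever the profiler reports. This is a minimal sketch and not part of the original page: `measure` and `busyWork` are hypothetical names, and `Date.now()` only has millisecond resolution, so it suits slow code like a multi-second freeze better than micro-benchmarks.

```javascript
// Hypothetical helper: run fn several times and return the average
// wall-clock time per run in milliseconds.
function measure(fn, runs) {
    var start = Date.now();
    for (var i = 0; i < runs; i++) {
        fn();
    }
    return (Date.now() - start) / runs;
}

// Example workload standing in for the real filter call.
function busyWork() {
    var sum = 0;
    for (var i = 0; i < 100000; i++) {
        sum += i;
    }
    return sum;
}

console.log('average ms per run: ' + measure(busyWork, 10));
```

On the page itself the call could look something like `measure(function () { filter(filterState); }, 5)`.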

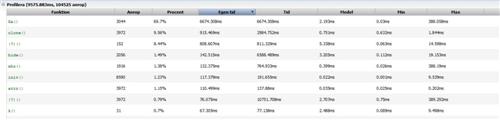

If you do the pre profiling it will be much easier to plan your work and know when you are ready. More on that later. Here is my pre profiling:

This is a capture from Firebug. You can access the profiler under the Console tab. One thing to note is that it is very hard to tell which function each entry refers to, but there is a link (outside this capture) to the code for each entry. The last (?)() is the loop in my filter. As you can see, it has a high total time (Tid) but a low time when sub-calls are excluded (Egen tid, "own time"). All the other functions are inside jQuery.

This told me that the filtering logic itself is not the slow part, but rather how I hide and show the rows.
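One way to double-check a conclusion like this is to split the loop into a pure decision phase and a DOM phase, so each can be timed on its own. The sketch below covers only the decision phase and runs on plain data. `decide` and the synthetic `ages` array are illustrative assumptions, not code or data from the page.

```javascript
// Hypothetical pure function: decides which rows to show without touching the DOM.
function decide(ages, filterState) {
    var visible = [];
    var ageIncl = 0;
    for (var i = 0; i < ages.length; i++) {
        var show = filterState.ageFrom <= ages[i] && ages[i] <= filterState.ageTo;
        if (show) {
            ageIncl++;
        }
        visible.push(show);
    }
    return { visible: visible, ageIncl: ageIncl, total: ages.length };
}

// Synthetic data for illustration: 993 ages cycling through 0..89.
var ages = [];
for (var n = 0; n < 993; n++) {
    ages.push(n % 90);
}

var result = decide(ages, { ageFrom: 20, ageTo: 40 });
console.log(result.ageIncl + '/' + result.total);
```

If this phase finishes almost instantly while the full filter still freezes the browser, the time must be going into the DOM updates.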

Step 2: Try to optimize

So my theory is that the hiding and showing is slow. The first thing to notice is that I placed the printing of the result inside the loop. That is a waste of resources, and it can be moved out of the loop. The code I'm referring to is:

    $('#ageResult').html(ageIncl + '/' + total);

Being a bit lazy, I didn't profile the page after this change. I probably should have, but I really doubt that was the slowest part. I had my attention on this block:

    if (show) {
        elem.show();
    } else {
        elem.hide();
    }

So what if I let the CSS engine do the work instead? I have a class 'hidden' that sets display:none; so why not use it? I changed the function to:

    var filter = function (filterState) {
        var total = 0;
        var ageIncl = 0;
        $('#matrikel tr').each(function () {
            total++;
            var elem = $(this);
            var show = true;
            var age = elem.attr('data-age');
            if (filterState.ageFrom <= age && age <= filterState.ageTo) {
                ageIncl++;
            } else {
                show = false;
            }
            if (show != elem.is(':visible')) {
                elem.toggleClass('hidden');
            }
        });
        $('#ageResult').html(ageIncl + '/' + total);
    };

Great! That will run so much faster.

Step 3: You aren't as good as you think - Profile!

Testing the page, I realized I had just made it worse. At least that was my feeling. Let's get it in black and white.

That was interesting. The loop is still the second (?)(), but notice the filter() function. This is actually not my filter function but a function inside jQuery. Remember the elem.is(':visible') call. If I had a better profiler that could show the call stack, I'm sure it would show that call leading to filter(). The filter() function is not very heavy in itself, but its total time matches up fairly well with the load() function. So my conclusion is that the visibility check was a bad idea.

Tip #2: Do not only look at whether your optimization made the code faster or slower. Also look at whether you have moved the bottleneck. If you have, it is a good indication that you are at least looking at the right part, but you need to find another optimization.

With Tip #2 in mind, we can see that Ga(), which was our earlier bottleneck, is no longer a problem. What does that mean? It means that the idea of using the CSS engine is a good one. We are no longer spending a lot of time on hiding and showing. It's just the implementation that is bad.
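The check described in Tip #2 can be phrased as a small comparison of two profiler runs. The sketch below is illustrative only: each profile object maps a function name to its total time in ms, and both the names and the numbers are made up for the example rather than read from the captures.

```javascript
// Hypothetical helper: name of the function with the largest time in a profile.
function topBottleneck(profile) {
    var worst = null;
    for (var name in profile) {
        if (worst === null || profile[name] > profile[worst]) {
            worst = name;
        }
    }
    return worst;
}

// Made-up numbers, for illustration only.
var before = { hideShow: 2500, visibilityCheck: 0, other: 300 };
var after = { hideShow: 40, visibilityCheck: 3100, other: 300 };

// The bottleneck has moved when the slowest function differs between runs.
var moved = topBottleneck(before) !== topBottleneck(after);
console.log('bottleneck moved: ' + moved);
```

A moved bottleneck together with a drop in the old one suggests the idea was right even though the total got worse.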

Step 4: Use your new knowledge and try to optimize again

With the assumption that the visibility check is evil, the next logical step is to remove it. A simple rewrite and we have:

    var filter = function (filterState) {
        var total = 0;
        var ageIncl = 0;
        $('#matrikel tr').each(function () {
            total++;
            var elem = $(this);
            var show = true;
            var age = elem.attr('data-age');
            if (filterState.ageFrom <= age && age <= filterState.ageTo) {
                ageIncl++;
            } else {
                show = false;
            }
            if (show) {
                elem.removeClass('hidden');
            } else {
                elem.addClass('hidden');
            }
        });
        $('#ageResult').html(ageIncl + '/' + total);
    };

Ok, so that has to be good. Or has it?
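A possible variation on this rewrite: if the goal of the earlier `is(':visible')` check was to avoid touching rows whose state has not changed, a cheaper test is to look at the class itself. The sketch below uses the standard DOM `classList` API instead of jQuery so it can be tried in isolation. `applyVisibility` is a hypothetical helper, not the author's code, and whether skipping unchanged rows helps at all would need its own profiling run.

```javascript
// Hypothetical helper: add or remove the 'hidden' class only when the row's
// current state differs from the wanted one. Returns true if it changed.
function applyVisibility(row, show) {
    var isHidden = row.classList.contains('hidden');
    if (isHidden === show) {
        // toggle(name, force): force === true adds the class, false removes it
        row.classList.toggle('hidden', !show);
        return true;
    }
    return false;
}
```

Inside the `.each()` callback it could be called as `applyVisibility(this, show)`, since `this` is the plain `tr` element there.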

Step 5: Profile again

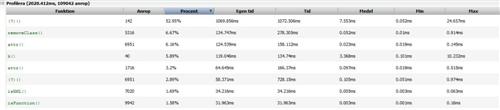

A quick test before profiling again indicated that the problem was now gone but let's look at it anyway.

That truly looks better. The maximum time of one loop iteration, the last (?)(), has gone from 200 ms to under 1 ms. The average also shows a lot of improvement. Looking at the relative times, we can see that there is now a new bottleneck too. The older profiles show that this call has been equally slow the whole time, which indicates that the optimization didn't slow that part down.

The optimization worked and the code that earlier caused a problem is now gone without causing any new issues. A good result by all standards.

Tip #3: Use your old profiling results to see whether the newest bottleneck was caused by the optimization or has been that slow all along.
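As a rough illustration of Tip #3, the sketch below compares one function's time across an old and a new profile. The helper name `wasAlreadySlow`, the function names, the numbers and the 20 percent tolerance are all assumptions chosen for the example, not values from the captures.

```javascript
// Hypothetical helper: true when a function took roughly the same time in
// both profiles, within a relative tolerance (0.2 means 20 percent).
function wasAlreadySlow(oldProfile, newProfile, name, tolerance) {
    var oldTime = oldProfile[name];
    var newTime = newProfile[name];
    if (oldTime === undefined || newTime === undefined) {
        return false; // cannot compare a function that is missing from a run
    }
    return Math.abs(newTime - oldTime) <= tolerance * oldTime;
}

// Made-up numbers, for illustration only.
var oldRun = { rowLoop: 2900, pageSetup: 310 };
var newRun = { rowLoop: 45, pageSetup: 300 };

console.log(wasAlreadySlow(oldRun, newRun, 'pageSetup', 0.2)); // true
console.log(wasAlreadySlow(oldRun, newRun, 'rowLoop', 0.2));   // false
```

A `true` result for the new top entry suggests it was always that slow and has only become visible because the old bottleneck is gone.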

Tip #4: Use the CSS engine whenever you can and whenever you need better performance. The CSS engine has been a central part of browsers for a long time and is highly optimized.